Before the 2010s, Computer Vision was very different. Then something happened.

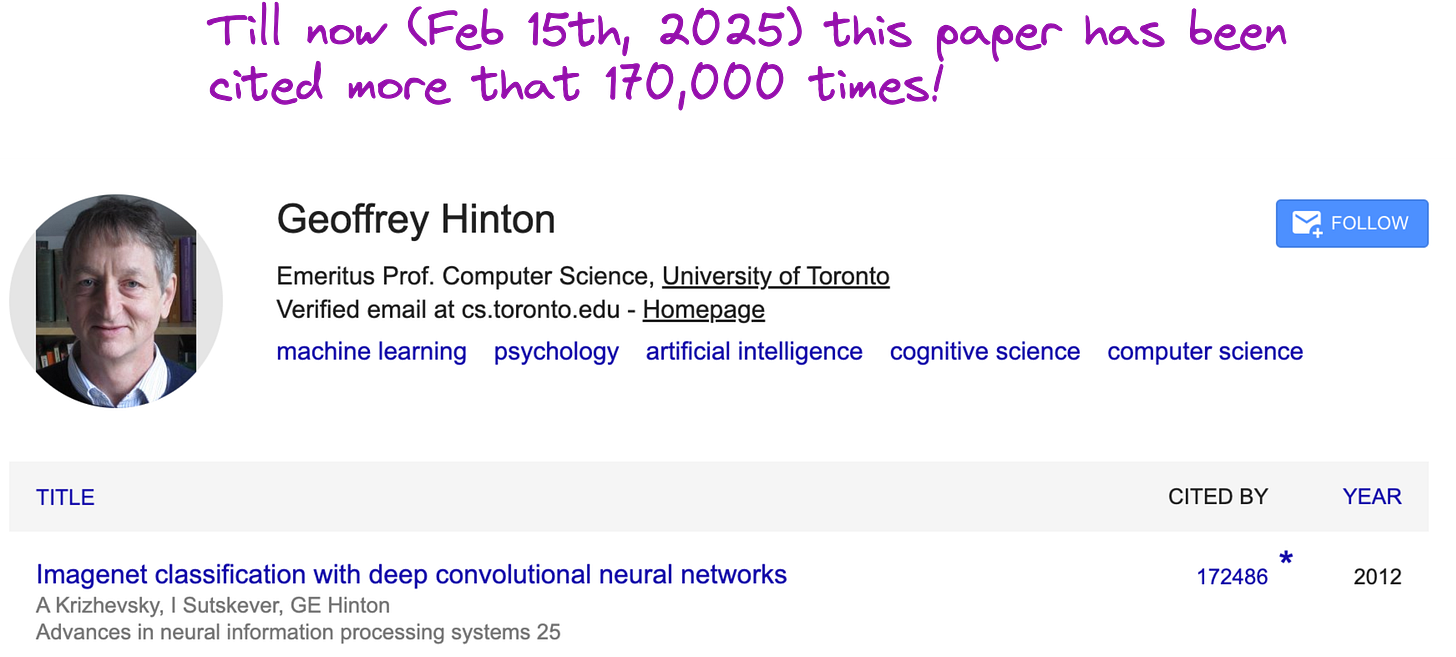

AlexNet (170,000+ citations): The architecture that propelled CV to where it is today.

Computer Vision primarily tries to teach computers to make sense of images, just like humans do.

When you see an apple, you immediately understand that it is an apple. How can you teach a computer to recognize a cat in an image? Let us discuss that in this article.

Computer vision in the early days was not ML

Before 2010, many computer vision tasks were performed using image filters. Filters let you identify specific features within an image: some filters are meant for edge detection, others for detecting circular shapes. These filters were designed by hand.

A quick demonstration of an edge detection filter

Say you want to detect the edges in an image. An edge is a place where pixel values change abruptly.

How do you detect sudden transitions in pixel values?

You can slide a small edge-detection filter (a kernel) over the input image, with some padding added around the border, to get an output image that highlights the edges.

If all pixels in a region have the same or similar values, there is no sharp edge there, and because the filter's weights sum to zero, its output for those pixels is close to zero. Only where neighboring pixel values differ does the filter give a non-zero (or not-so-small) output.
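To make the sliding-filter idea concrete, here is a minimal NumPy sketch. The article's original filter figure is not reproduced here, so the 3×3 Laplacian-style kernel below is an assumption; any kernel whose weights sum to zero behaves the same way on flat regions. Padding replicates the border pixels (also an assumption), because zero padding would create spurious "edges" at the image border.

```python
import numpy as np

# Assumed edge-detection kernel (Laplacian-style). Its weights sum to zero,
# so a region of identical pixels produces an output of exactly zero.
KERNEL = np.array([[-1, -1, -1],
                   [-1,  8, -1],
                   [-1, -1, -1]], dtype=float)

def apply_filter(image: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Slide `kernel` over a 2-D `image`; output has the same size as the input."""
    kh, kw = kernel.shape
    pad_h, pad_w = kh // 2, kw // 2
    # Replicate border pixels so the image edges are not mistaken for real edges.
    padded = np.pad(image, ((pad_h, pad_h), (pad_w, pad_w)), mode="edge")
    out = np.zeros(image.shape, dtype=float)
    for i in range(image.shape[0]):
        for j in range(image.shape[1]):
            window = padded[i:i + kh, j:j + kw]
            out[i, j] = np.sum(window * kernel)  # weighted sum of the neighborhood
    return out

# A 6x6 image: left half dark (0), right half bright (10), i.e. one vertical edge.
image = np.zeros((6, 6))
image[:, 3:] = 10

edges = apply_filter(image, KERNEL)
print(edges)
```

Every output pixel is zero except the two columns on either side of the boundary (−30 and +30), which is exactly the "only where neighbors differ" behavior described above.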

Computer Vision in the early days: Traditional rule-based systems

In classical rule-based CV systems, we define logic (such as filters) by hand to decide whether an image contains a flower or a human. Although such systems worked well for specific use cases, it was very difficult to make them handle the multitude of edge cases found in real images.

In Machine Learning, we let the model figure out the logic from large amounts of data instead of imposing strict, hand-written rules.
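The contrast between the two approaches can be shown on a toy problem. In this sketch the single "roundness" feature, the labels, and the hand-picked threshold are all hypothetical numbers chosen for illustration. The rule-based version hard-codes a cutoff; the ML version learns one by trying every candidate threshold and keeping the one with the fewest mistakes on labeled data (a one-feature decision stump, far simpler than a real CV model).

```python
import numpy as np

# Hypothetical 1-D feature per image (e.g. a "roundness" score) and labels
# (1 = flower, 0 = not a flower). Toy numbers for illustration only.
features = np.array([0.2, 0.35, 0.4, 0.55, 0.7, 0.8, 0.9])
labels = np.array([0, 0, 0, 1, 1, 1, 1])

def rule_based(x: float) -> int:
    # Rule-based: a human picks the threshold by hand.
    return int(x > 0.75)

def fit_threshold(x: np.ndarray, y: np.ndarray) -> float:
    # ML-style: try each observed value as a threshold, keep the one with fewest errors.
    candidates = np.sort(x)
    errors = [np.sum((x > t).astype(int) != y) for t in candidates]
    return float(candidates[int(np.argmin(errors))])

learned_t = fit_threshold(features, labels)
rule_acc = np.mean([rule_based(v) == l for v, l in zip(features, labels)])
learned_acc = np.mean((features > learned_t).astype(int) == labels)
print(learned_t, rule_acc, learned_acc)
```

Here the learned threshold (0.4) classifies every example correctly, while the hand-picked rule misses two. The data, not the programmer, decided where the boundary goes.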

Why is ML better than rule-based systems?

If you had to manually define the patterns that identify a cat in an image (ears, whiskers, eyes, etc.), you could spend your entire life writing conditions for the countless situations that occur in real-life images.

What if there is a dog and a cat in an image?

What if the cat is jumping up a fence?

What if the cat is in a snow background?

Just like Jian-Yang from Silicon Valley sorting images into “hot dog” vs. “not hot dog”, you would be stuck doing boring scut work.

Think about ChatGPT. Nobody explicitly programmed the LLM with pre-determined replies. The LLM figured out how language works by training on a large corpus of internet text. During pretraining, the only goal was next-word prediction; other abilities, such as translation, emerged along the way.
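The "learn by predicting the next word" idea can be sketched at toy scale. The example below is a bigram counter, a far simpler technique than a neural LLM, trained on a made-up three-sentence corpus. No grammar rules are written anywhere: the model only counts which word followed which, then predicts the most frequent follower.

```python
from collections import Counter, defaultdict
from typing import Optional

# Toy corpus standing in for "internet data" (illustrative only).
corpus = "the cat sat on the mat . the cat ate the fish . the dog sat on the rug ."

def train_bigram(text: str) -> dict:
    # Count how often each word follows each other word; no hand-written rules.
    counts = defaultdict(Counter)
    words = text.split()
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts

def predict_next(model: dict, word: str) -> Optional[str]:
    # Predict the follower seen most often during training (None if word is unseen).
    followers = model.get(word)
    if not followers:
        return None
    return followers.most_common(1)[0][0]

model = train_bigram(corpus)
print(predict_next(model, "the"))  # cat
print(predict_next(model, "sat"))  # on
```

A real LLM replaces the counting table with a deep neural network and conditions on far more context, but the training signal (predict what comes next) is the same in spirit.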

Similarly, ML models can be trained to learn patterns in images without being told explicitly what to look for. This is what makes modern deep-learning-based computer vision so powerful, and so useful in applications such as Tesla’s self-driving cars.

Consider the example of Optical Character Recognition (OCR)

In the earliest approaches to OCR (reading text from an image), you had to handle challenges such as the following:

Variability in fonts and styles

Variations in image quality

Background complexity

Text orientation and alignment

Handwritten vs. printed text

Language and script diversity

Overlapping and connected characters

Color and contrast issues

Multiline and paragraph structure

Special characters, numbers, and symbols

Rule-based (Heuristic) systems

Hand-crafted rules: For example, if a certain shape had a loop at the top and a straight line descending on the left side, it might be recognized as “p.” These heuristics could get very extensive and required a great deal of manual engineering.

Decision trees: Decision trees were sometimes used as a structured way to apply these rules (e.g., “Does the character have a closed loop? Yes/No.”).
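A hand-written decision tree of this kind might look like the sketch below. The `GlyphFeatures` fields and the `classify_glyph` rules are hypothetical, invented for illustration; in a real system, extracting features like "has a closed loop" reliably from pixels was itself a large engineering effort.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class GlyphFeatures:
    # Hypothetical hand-engineered features describing one character shape.
    has_closed_loop: bool
    loop_position: str          # "top" or "bottom" of the glyph
    stem_side: Optional[str]    # "left", "right", or None if there is no stem
    stem_descends: bool         # does the stem go below the baseline?

def classify_glyph(f: GlyphFeatures) -> str:
    # Hand-written decision tree: every branch is a yes/no rule chosen by a human.
    if f.has_closed_loop:
        if f.stem_side == "left" and f.stem_descends:
            return "p"
        if f.stem_side == "right" and f.stem_descends:
            return "q"
        if f.stem_side == "left" and f.loop_position == "bottom":
            return "b"
        if f.stem_side == "right" and f.loop_position == "bottom":
            return "d"
        if f.stem_side is None:
            return "o"
    return "unknown"  # every new font, style, or distortion needs yet another rule

print(classify_glyph(GlyphFeatures(True, "top", "left", True)))  # p
```

Even this tiny tree covers only five lowercase letters in an idealized form, which shows why such systems grew so large.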

With a deep learning approach, however, you don’t have to hand-code a solution to each of these challenges, as long as your data covers them. If you have a lot of “image-ified” text (handwritten, scanned, photocopied, etc.) and the corresponding digitized text, you can train a deep CNN using the “image-ified” text as the input and the corresponding digitized text as the output (or label). The same recipe lets you apply deep CNNs in fields ranging from medicine and automotive to manufacturing.
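The core recipe, learning a mapping from (image, label) pairs without writing any rules, can be sketched at toy scale. Everything here is an assumption for illustration: the 5×5 "characters" are synthetic vertical (`|`) and horizontal (`-`) strokes with noise, and a single-layer softmax classifier trained by gradient descent stands in for a deep CNN, which learns the same kind of mapping with many more layers and parameters.

```python
import numpy as np

rng = np.random.default_rng(0)

def make_glyph(kind: str) -> np.ndarray:
    # Hypothetical 5x5 "image-ified" character: a stroke at a random
    # position plus pixel noise, flattened into a 25-value vector.
    img = rng.normal(0, 0.1, size=(5, 5))
    pos = rng.integers(1, 4)
    if kind == "|":
        img[:, pos] += 1.0   # vertical stroke
    else:
        img[pos, :] += 1.0   # horizontal stroke
    return img.ravel()

classes = ["|", "-"]
X = np.array([make_glyph(c) for c in classes for _ in range(100)])  # inputs
y = np.array([i for i in range(len(classes)) for _ in range(100)])  # labels

# Softmax classifier trained with cross-entropy loss and plain gradient descent.
W = np.zeros((X.shape[1], len(classes)))
b = np.zeros(len(classes))
for _ in range(200):
    logits = X @ W + b
    logits -= logits.max(axis=1, keepdims=True)          # numerical stability
    probs = np.exp(logits) / np.exp(logits).sum(axis=1, keepdims=True)
    probs[np.arange(len(y)), y] -= 1.0                   # probs - one_hot = dLoss/dlogits
    W -= 0.1 * X.T @ probs / len(y)
    b -= 0.1 * probs.mean(axis=0)

accuracy = np.mean((X @ W + b).argmax(axis=1) == y)
print(accuracy)
```

Nowhere does the code say "a vertical line means `|`"; the weights pick that up from the labeled examples.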

AlexNet: The architecture that changed the history of computer vision in 2012

Image classification was still very difficult in the early 2010s, because systems relied on hand-engineered features and expert-designed rules. In 2012, however, Alex Krizhevsky, Ilya Sutskever (OpenAI co-founder) and Geoffrey E. Hinton (Nobel Laureate in Physics, 2024) published a seminal paper, “ImageNet Classification with Deep Convolutional Neural Networks” (the AlexNet paper), based on a deep convolutional neural network. It changed the way computer vision was done.

As of February 15, 2025, this paper has been cited more than 170,000 times!

So what did AlexNet accomplish?

Massive performance improvement in the ImageNet challenge

AlexNet won the ImageNet Large Scale Visual Recognition Challenge (ILSVRC) 2012 by a huge margin, with a top-5 error rate of 15.3% (the second-best entry had a top-5 error of 26.2%).
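"Top-5 error" counts a prediction as correct if the true class appears anywhere among the model's five highest-scoring classes. A minimal NumPy sketch of the metric follows; the scores and labels are made-up toy values over 10 classes rather than ILSVRC's 1,000.

```python
import numpy as np

def top5_error(scores: np.ndarray, labels: np.ndarray) -> float:
    """Fraction of samples whose true label is NOT among their 5 highest scores."""
    top5 = np.argsort(scores, axis=1)[:, -5:]      # indices of the 5 largest scores
    hit = (top5 == labels[:, None]).any(axis=1)    # is the true label among them?
    return 1.0 - hit.mean()

# Toy example: 3 images, 10 classes.
scores = np.array([
    [0.9, 0.8, 0.7, 0.6, 0.5, 0.4, 0.3, 0.2, 0.1, 0.0],  # true class 4 is 5th best: hit
    [0.9, 0.8, 0.7, 0.6, 0.5, 0.4, 0.3, 0.2, 0.1, 0.0],  # true class 5 is 6th best: miss
    [0.0, 0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9],  # true class 9 is best: hit
])
labels = np.array([4, 5, 9])
print(top5_error(scores, labels))  # one miss out of three
```

A top-5 error of 15.3% therefore means that for roughly 15 of every 100 test images, the correct class was not in AlexNet's five best guesses.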

Deep Neural Networks became mainstream

Before AlexNet, deep learning was mostly just a research topic.

AlexNet showed that deep learning can outperform traditional methods (SVMs, random forests, etc.) when trained with enough data.

Use of GPUs for training

The paper showed that GPUs could dramatically speed up deep learning: AlexNet was trained on two NVIDIA GTX 580 GPUs.

Before this, training deep networks of this size on CPUs was prohibitively slow.

Brought several architectural and training innovations together in one model

ReLU activation function (instead of sigmoid/tanh) → Faster training.

Dropout regularization → Reduced overfitting.

Overlapping max pooling → Slightly lower error rates and less overfitting.

Data augmentation (random cropping, horizontal flipping, RGB intensity alterations) → Reduced overfitting.
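Each technique in the list above fits in a few lines of NumPy. This is a simplified sketch, not AlexNet's actual code. The pooling defaults (window 3, stride 2) match the paper's overlapping pooling. The dropout shown is the common "inverted" variant, which rescales during training; AlexNet instead multiplied outputs by 0.5 at test time, which gives the same expected value. The PCA-based color augmentation is omitted for brevity.

```python
import numpy as np

rng = np.random.default_rng(0)

def relu(x: np.ndarray) -> np.ndarray:
    # ReLU: max(0, x). Unlike sigmoid/tanh, it does not saturate for positive inputs.
    return np.maximum(0.0, x)

def dropout(x: np.ndarray, p: float = 0.5, training: bool = True) -> np.ndarray:
    # Inverted dropout: zero each unit with probability p, rescale survivors by 1/(1-p).
    if not training:
        return x
    mask = rng.random(x.shape) >= p
    return x * mask / (1.0 - p)

def max_pool(x: np.ndarray, size: int = 3, stride: int = 2) -> np.ndarray:
    # Pooling windows overlap whenever stride < size.
    out_h = (x.shape[0] - size) // stride + 1
    out_w = (x.shape[1] - size) // stride + 1
    out = np.empty((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = x[i * stride:i * stride + size, j * stride:j * stride + size].max()
    return out

def augment(img: np.ndarray, crop: int) -> np.ndarray:
    # Random crop followed by a random horizontal flip (img shape: H x W x C).
    h, w = img.shape[:2]
    top = rng.integers(0, h - crop + 1)
    left = rng.integers(0, w - crop + 1)
    patch = img[top:top + crop, left:left + crop]
    return patch[:, ::-1] if rng.random() < 0.5 else patch

fmap = np.arange(49, dtype=float).reshape(7, 7)
print(max_pool(fmap).shape)  # (3, 3)
```

Because each 3×3 window advances by only 2 pixels, neighboring windows share a row or column; that overlap is what distinguishes this from standard non-overlapping 2×2 pooling.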

Deep Learning took over Computer Vision

Within just a few years, deep learning became the default approach for computer vision. Tasks like object detection, segmentation, and image generation came to be dominated by CNNs.

Eventually, deep learning expanded into NLP, robotics, healthcare, and beyond.

AlexNet's success at ILSVRC 2012 triggered the deep learning revolution. By proving that deep CNNs could outperform traditional computer vision approaches, it paved the way for the AI boom we see today.

A common doubt: What is the difference between Machine Learning and Deep Learning?

Machine Learning (ML) is a broad field of artificial intelligence that focuses on creating algorithms capable of learning patterns from data. These algorithms can then make predictions or decisions without being explicitly programmed for every scenario. Traditional ML methods often rely on manually crafted features (feature engineering) that help the model learn.

Deep Learning (DL) is a specialized subset of machine learning that uses artificial neural networks structured in many layers (hence “deep”). Instead of requiring manual feature engineering, deep learning models learn to extract features automatically from raw data. While deep learning can deliver very powerful performance—especially in image recognition, natural language processing, and speech recognition—it typically requires large amounts of data and significant computational power (e.g., GPUs) to train effectively.

In short:

ML is the larger umbrella; DL is one of the newest, most powerful techniques within it.

Traditional ML may require substantial human effort to design features, while DL learns its own features from raw data.

DL often outperforms traditional ML methods when there is a lot of data available. However, for smaller datasets or simpler tasks, traditional ML methods might be sufficient or even preferred.

Deep Learning revolution

AlexNet almost single-handedly started the Deep Learning revolution. So what is “deep” in deep learning?

In a shallow neural network, you have an input layer, one or two hidden layers, and an output layer.

In a deep neural network, you have an input layer, many hidden layers (sometimes dozens or even hundreds), and an output layer. Successive layers can learn increasingly abstract features from the raw input, for example edges, then textures, then object parts.
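In code, "shallow" versus "deep" is simply the number of hidden layers stacked between input and output. The sketch below uses fully connected layers for brevity (AlexNet itself used convolutional layers), and the layer sizes (784 inputs for a 28×28 image, 128 hidden units, 10 classes) are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

def init_mlp(layer_sizes: list[int]) -> list[tuple[np.ndarray, np.ndarray]]:
    # One (weights, bias) pair per connection between consecutive layers.
    return [(rng.normal(0, np.sqrt(2.0 / n_in), size=(n_in, n_out)), np.zeros(n_out))
            for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:])]

def forward(params, x: np.ndarray) -> np.ndarray:
    # Hidden layers apply ReLU; the final (output) layer stays linear.
    for i, (W, b) in enumerate(params):
        x = x @ W + b
        if i < len(params) - 1:
            x = np.maximum(0.0, x)
    return x

shallow = init_mlp([784, 128, 10])          # input -> 1 hidden layer -> output
deep = init_mlp([784] + [128] * 8 + [10])   # input -> 8 hidden layers -> output

x = rng.normal(size=(2, 784))               # a batch of 2 flattened images
print(forward(shallow, x).shape, forward(deep, x).shape)  # (2, 10) (2, 10)
print(len(shallow) - 1, len(deep) - 1)      # hidden layers: 1 8
```

Both networks map the same input to the same output shape; the deep one just transforms the data through more intermediate representations, which is where the "increasingly abstract features" come from once it is trained.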

Lecture video

I have released a lecture video on this topic on Vizuara’s YouTube channel. Do check this out. I hope you enjoy watching this lecture as much as I enjoyed making it.
